Introduction to Kubernetes: Definition and Overview

Kubernetes is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications. It provides a robust and scalable infrastructure for running applications in a distributed environment.

Kubernetes lets developers describe what an application needs and leaves placement, restarts, and scaling to the platform, so they can focus on writing code rather than managing individual machines.

With Kubernetes, you can easily deploy and manage applications across multiple hosts, making it easier to scale and update your applications as needed.

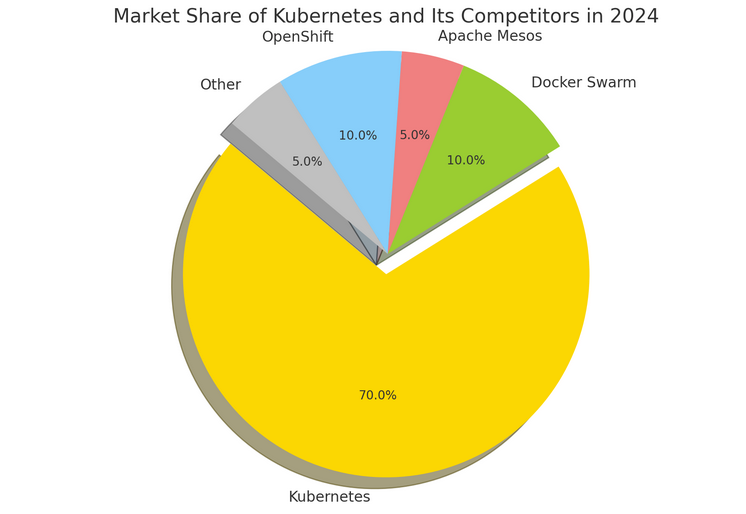

Kubernetes has evolved significantly since its inception in 2014. Initially developed by Google, it was designed to manage their massive containerized workloads. Later, it was donated to the Cloud Native Computing Foundation (CNCF), which now oversees its development and maintenance. Today, Kubernetes is widely adopted by organizations of all sizes and is the de facto standard for container orchestration.

Core Concepts and Components

To understand Kubernetes, it’s essential to grasp its core concepts and components. At the heart of Kubernetes is the cluster, which consists of a set of nodes. Nodes are the individual machines that run your applications. Within each node, you have pods, which are the smallest deployable units in Kubernetes. A pod can contain one or more containers that share network and storage resources.

Deployments and services are two critical components that work together in Kubernetes. Deployments define the desired state of your application, including the number of replicas and the container image to use. Services, on the other hand, provide a stable network endpoint for accessing your application, even as pods are created or terminated.

The control plane, comprising various components like the API server, scheduler, and controller manager, is responsible for managing the cluster’s overall state. Worker nodes, on the other hand, execute the tasks assigned to them by the control plane.

Volumes and persistent storage are essential for managing data in Kubernetes. A volume gives the containers in a pod a place to store and share data, but an ordinary volume lasts only as long as its pod. Persistent volumes, which pods request through persistent volume claims, outlive any single pod. They let you attach and mount external storage systems to your pods, ensuring data durability and availability.

Setting Up Your First Cluster

Before diving into Kubernetes, there are a few prerequisites you need to have in place. First, you’ll need a set of machines or virtual machines that will act as your cluster nodes. These nodes should meet the minimum requirements in terms of CPU, memory, and disk space.

Once you have your nodes ready, you’ll need to install and configure Kubernetes. There are various installation methods available, including using a managed Kubernetes service, such as Google Kubernetes Engine (GKE) or Amazon Elastic Kubernetes Service (EKS), or setting up your own cluster using tools like kubeadm or kops.

After installing Kubernetes, you can create your first cluster using the appropriate command-line tools or graphical interfaces provided by your chosen installation method. Once your cluster is up and running, you can start deploying your applications using Kubernetes manifests, which are YAML files that define the desired state of your application.

Kubernetes in Action: Deploying Applications

Deploying applications in Kubernetes involves creating deployment manifests that define the desired state of your application. These manifests specify details such as the container image to use, the number of replicas, and any required environment variables or volumes.
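
A deployment manifest is structured data, so one way to get a feel for its shape is to build one in code. The sketch below assembles a minimal Deployment as a Python dictionary and prints it as JSON, which kubectl accepts alongside YAML. The name `web`, the image `nginx:1.27`, and the `LOG_LEVEL` variable are placeholder values chosen for illustration, and `build_deployment` is a hypothetical helper, not part of any Kubernetes library.

```python
import json


def build_deployment(name: str, image: str, replicas: int, env: dict[str, str]) -> dict:
    """Return a minimal Deployment manifest (apps/v1) as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "env": [{"name": k, "value": v} for k, v in env.items()],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Placeholder values for illustration only.
    manifest = build_deployment("web", "nginx:1.27", replicas=3, env={"LOG_LEVEL": "info"})
    print(json.dumps(manifest, indent=2))
```

Saving the output to a file such as `deployment.json` and running `kubectl apply -f deployment.json` should create the Deployment, assuming kubectl is already configured for your cluster.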

Once you have your deployment manifest ready, you can use the Kubernetes command-line tools or graphical interfaces to apply the manifest and create the necessary resources. Kubernetes will then create the specified number of replicas, ensuring that your application is running and accessible.

Scaling applications in Kubernetes is as simple as updating the number of replicas in your deployment manifest. You can scale up or down based on demand, ensuring that your application can handle varying levels of traffic. Kubernetes will automatically manage the creation or termination of pods to achieve the desired scale.

Managing application updates and rollbacks is also straightforward with Kubernetes. When you update the container image in your deployment manifest, Kubernetes rolls out the new version gradually, replacing old pods with new ones a few at a time. With enough replicas and working readiness checks, this avoids downtime during the update. If any issues arise, you can roll back to the previous version with a single command, kubectl rollout undo.
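
To make the gradual rollout concrete, here is a deliberately simplified model of how old pods are swapped for new ones. `maxSurge` and `maxUnavailable` are real fields of the Deployment rolling update strategy, but `rolling_update_steps` is a hypothetical function and the model assumes every new pod becomes ready instantly, which a real cluster does not guarantee.

```python
def rolling_update_steps(
    replicas: int, max_surge: int = 1, max_unavailable: int = 0
) -> list[tuple[int, int]]:
    """Return (old_pods, new_pods) counts after each step of a simplified rollout."""
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("max_surge and max_unavailable cannot both be 0")
    old, new = replicas, 0
    steps = [(old, new)]
    while old > 0 or new < replicas:
        # Start new pods without exceeding replicas + max_surge pods in total.
        new += min(replicas - new, replicas + max_surge - (old + new))
        # Stop old pods while keeping at least replicas - max_unavailable running.
        old -= min(old, old + new - (replicas - max_unavailable))
        steps.append((old, new))
    return steps


if __name__ == "__main__":
    for old, new in rolling_update_steps(3):
        print(f"old={old} new={new}")
```

With three replicas and the defaults used here, the model replaces one pod per step, so the number of running pods never drops below three.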

Kubernetes also offers self-healing mechanisms that support the availability and reliability of your applications. If a container crashes, Kubernetes restarts it, and if a pod or node fails, the affected pods are replaced on healthy nodes to restore the desired state. This built-in resilience helps minimize downtime and keep your applications running.
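
Self-healing comes down to repeatedly comparing the desired state with what is actually running and acting on the difference. The following is a toy sketch of that comparison, not the real controller code: `reconcile` is a hypothetical function, and pod health is reduced to a single boolean.

```python
def reconcile(desired_replicas: int, pods: dict[str, bool]) -> list[str]:
    """Return the actions needed to reach the desired number of healthy pods.

    `pods` maps a pod name to True (healthy) or False (failed).
    """
    # Failed pods are always removed.
    actions = [f"delete {name}" for name, healthy in pods.items() if not healthy]
    healthy_names = sorted(name for name, healthy in pods.items() if healthy)
    shortfall = desired_replicas - len(healthy_names)
    if shortfall > 0:
        # Too few healthy pods: create replacements.
        actions += ["create pod"] * shortfall
    elif shortfall < 0:
        # Too many healthy pods: remove the surplus.
        actions += [f"delete {name}" for name in healthy_names[:-shortfall]]
    return actions


if __name__ == "__main__":
    print(reconcile(3, {"web-a": True, "web-b": False}))
```

Running the same function again once the actions have been carried out returns an empty list, which is the steady state a controller aims for.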

Networking in Kubernetes

Networking is a crucial aspect of Kubernetes, as it enables communication between pods and services. Each pod in Kubernetes has its unique IP address, allowing other pods within the cluster to reach it. This pod-to-pod communication is essential for building distributed applications.

Services provide a stable network endpoint for accessing your applications. They act as an abstraction layer, allowing you to expose your application to the outside world or other pods within the cluster. Services can be of different types, such as ClusterIP, NodePort, or LoadBalancer, depending on your requirements.
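
A Service finds its pods through a label selector, which is why it keeps working as individual pods come and go. The sketch below builds a minimal Service manifest and shows the matching rule in isolation. `build_service` and `selects` are hypothetical helpers, and the labels and ports are placeholder values.

```python
SERVICE_TYPES = {"ClusterIP", "NodePort", "LoadBalancer"}


def build_service(
    name: str,
    selector: dict[str, str],
    port: int,
    target_port: int,
    service_type: str = "ClusterIP",
) -> dict:
    """Return a minimal Service manifest (v1) as a plain dict."""
    if service_type not in SERVICE_TYPES:
        raise ValueError(f"unsupported service type: {service_type}")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": service_type,
            "selector": selector,
            # `port` is what clients connect to; `targetPort` is the container port.
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


def selects(service: dict, pod_labels: dict[str, str]) -> bool:
    """True if every key/value in the Service selector appears in the pod labels."""
    selector = service["spec"]["selector"]
    return all(pod_labels.get(key) == value for key, value in selector.items())


if __name__ == "__main__":
    svc = build_service("web", {"app": "web"}, port=80, target_port=8080)
    print(selects(svc, {"app": "web", "tier": "frontend"}))
    print(selects(svc, {"app": "db"}))
```

A pod may carry extra labels and still match. Only the keys named in the selector are compared.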

Ingress controllers are another essential component for exposing your applications to the outside world. They act as a reverse proxy, routing incoming requests to the appropriate services based on rules defined in the ingress resources. Ingress controllers provide advanced features like SSL termination and traffic routing, making them ideal for managing external access to your applications.

Network policies in Kubernetes allow you to define rules that control the flow of network traffic within your cluster. This helps ensure that only authorized pods can communicate with each other and adds an extra layer of security to your applications. Network policies are particularly useful in multi-tenant environments or when dealing with sensitive data.
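
As an illustration, the sketch below builds a NetworkPolicy that lets only pods with one set of labels reach pods with another set on a single TCP port. The helper name, labels, and port are placeholders. Note that policies are only enforced when the cluster's network plugin supports them.

```python
import json


def build_ingress_policy(
    name: str,
    namespace: str,
    target_labels: dict[str, str],
    allowed_labels: dict[str, str],
    port: int,
) -> dict:
    """Return a NetworkPolicy allowing ingress to target pods only from allowed pods."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Pods this policy applies to.
            "podSelector": {"matchLabels": target_labels},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    # Pods that are allowed to connect.
                    "from": [{"podSelector": {"matchLabels": allowed_labels}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


if __name__ == "__main__":
    policy = build_ingress_policy(
        "db-allow-api", "default", {"app": "db"}, {"app": "api"}, port=5432
    )
    print(json.dumps(policy, indent=2))
```

Once a pod is selected by any policy of type `Ingress`, inbound traffic that no policy allows is dropped, so this one rule is enough to close the database off from everything except the API pods.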

At its core, Kubernetes orchestrates containers, grouping them into logical units known as pods for efficient management and deployment. Its control plane continuously works to keep the cluster in the desired state, which makes Kubernetes a common foundation for modern application development and deployment workflows.

Why Understanding Kubernetes Matters

Understanding Kubernetes is crucial for modern-day developers and platform engineers. By leveraging Kubernetes, you can enhance the resilience of your applications, enable automatic scaling, and efficiently manage resources across clusters of machines. Unlike Docker Compose, which targets a single host, Kubernetes schedules workloads across multiple nodes, which gives it far more room to scale.

Core Functionalities of Kubernetes

Clusters

A Kubernetes cluster is a set of nodes, which are physical or virtual machines running the Kubernetes node software. Nodes do not need identical CPU or memory. Clusters scale horizontally: you add nodes to gain capacity, and Kubernetes places pods across them to use resources well and keep applications available.

Control Plane

The Kubernetes control plane exposes the cluster through its API server, and the kubectl command-line interface is the usual way to talk to it. From deploying applications to monitoring cluster state and managing configuration, kubectl serves as the central entry point for Kubernetes operations.

Deploying an Application

Deploying applications on Kubernetes involves defining configurations in YAML format and using the kubectl apply command to deploy or update application states. Kubernetes offers robust support for specifying resource requirements, disk volumes, and access controls, streamlining the deployment process.

Access Control

Kubernetes implements role-based access control (RBAC), enabling granular control over cluster resources and namespace-level access permissions. With RBAC, you can define roles and role bindings to restrict user access and ensure secure multi-tenancy environments.
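
A Role lists what may be done, and a RoleBinding says who may do it. The sketch below builds a read-only Role for pods in one namespace and binds it to a user. The helper names, the `dev` namespace, and the user `jane` are placeholders for illustration.

```python
import json

RBAC_GROUP = "rbac.authorization.k8s.io"


def build_pod_reader_role(name: str, namespace: str) -> dict:
    """Return a Role that grants read-only access to pods in one namespace."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": name, "namespace": namespace},
        "rules": [
            # The empty string is the core API group, where pods live.
            {"apiGroups": [""], "resources": ["pods"], "verbs": ["get", "list", "watch"]}
        ],
    }


def build_role_binding(name: str, namespace: str, role_name: str, user: str) -> dict:
    """Return a RoleBinding that grants the named Role to a single user."""
    return {
        "apiVersion": f"{RBAC_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": name, "namespace": namespace},
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_GROUP}],
        "roleRef": {"kind": "Role", "name": role_name, "apiGroup": RBAC_GROUP},
    }


if __name__ == "__main__":
    role = build_pod_reader_role("pod-reader", "dev")
    binding = build_role_binding("read-pods", "dev", "pod-reader", "jane")
    print(json.dumps([role, binding], indent=2))
```

Because both objects are namespaced, the user in this example can read pods in `dev` and nothing elsewhere.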

ConfigMaps and Secret Management

Kubernetes provides ConfigMaps and Secrets for managing configuration data and sensitive information. ConfigMaps centralize non-sensitive application configuration, while Secrets hold sensitive values such as passwords and tokens. Secret values are base64-encoded, not encrypted, by default, so limit who can read them with RBAC and consider enabling encryption at rest.
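
It is worth seeing how little protection the encoding in a Secret manifest provides on its own. The sketch below builds a Secret with base64-encoded values and then decodes it again without any key. `build_secret` and `decode_secret` are hypothetical helpers, and the password is a placeholder.

```python
import base64


def build_secret(name: str, values: dict[str, str]) -> dict:
    """Return an Opaque Secret manifest with base64-encoded values."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {
            key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in values.items()
        },
    }


def decode_secret(secret: dict) -> dict[str, str]:
    """Recover the plain values; no key is needed, which is the point."""
    return {
        key: base64.b64decode(value).decode("utf-8")
        for key, value in secret["data"].items()
    }


if __name__ == "__main__":
    secret = build_secret("db-credentials", {"password": "s3cr3t"})
    print(secret["data"])
    print(decode_secret(secret))
```

Anyone who can read the manifest can reverse the encoding, so access control and encryption at rest matter more than the encoding itself.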

Scheduler

The Kubernetes scheduler assigns each new pod to a node. It first filters out nodes that cannot satisfy the pod’s resource requests and placement constraints, then scores the remaining nodes and picks the best fit. By distributing pods across nodes in this way, the scheduler improves resource utilization and helps maintain application availability in dynamic environments.

minikube

Minikube serves as a lightweight tool for running Kubernetes clusters locally, facilitating development and testing workflows. With Minikube, developers can prototype applications and simulate production environments without the overhead of cloud deployment.

Service

Kubernetes services enable seamless communication between application components, offering various networking options for internal and external access. Whether through cluster IPs, NodePorts, or external load balancers, Kubernetes services ensure reliable connectivity and efficient traffic distribution.

Persistent Volume

Kubernetes persistent volumes provide durable storage for applications, decoupling data from pod lifecycles so that it survives container restarts and pod rescheduling. On cloud platforms, storage classes can provision the underlying disks automatically when a claim is created, which simplifies storage provisioning and management.
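
In practice a pod asks for storage through a PersistentVolumeClaim and then mounts that claim as a volume. The sketch below shows both halves as plain dictionaries. `build_pvc` and `mount_claim` are hypothetical helpers, and the claim name, size, image, and mount path are placeholder values.

```python
import json


def build_pvc(name: str, size: str, access_mode: str = "ReadWriteOnce") -> dict:
    """Return a minimal PersistentVolumeClaim manifest (v1)."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [access_mode],
            "resources": {"requests": {"storage": size}},
        },
    }


def mount_claim(pod_spec: dict, claim_name: str, mount_path: str) -> dict:
    """Return a copy of a pod spec whose first container mounts the claim."""
    volume_name = f"{claim_name}-vol"
    first, *rest = pod_spec["containers"]
    mounts = first.get("volumeMounts", []) + [{"name": volume_name, "mountPath": mount_path}]
    volumes = pod_spec.get("volumes", []) + [
        {"name": volume_name, "persistentVolumeClaim": {"claimName": claim_name}}
    ]
    return {**pod_spec, "containers": [{**first, "volumeMounts": mounts}, *rest], "volumes": volumes}


if __name__ == "__main__":
    pvc = build_pvc("data", "1Gi")
    pod_spec = mount_claim(
        {"containers": [{"name": "db", "image": "postgres:16"}]},
        "data",
        "/var/lib/postgresql/data",
    )
    print(json.dumps([pvc, pod_spec], indent=2))
```

If the pod is deleted and recreated with the same claim, it sees the same data, because the claim rather than the pod owns the storage.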

Understanding Kubernetes Networking

Kubernetes networking plays a pivotal role in facilitating communication between pods and services within clusters. By embracing pod networking, service networking, network policies, Ingress controllers, and Container Network Interface (CNI) plugins, Kubernetes offers a robust networking infrastructure for building scalable and secure applications.

Advanced Features and Practices

Once you’re familiar with the basics of Kubernetes, you can explore its advanced features and best practices. StatefulSets are ideal for running stateful applications that require stable network identities and persistent storage. They ensure that pods are created in a specific order and provide unique network identities and stable hostnames.
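
The stable identities follow a predictable naming scheme: each pod is named after the StatefulSet plus an ordinal, and a headless Service gives each pod its own DNS name. The sketch below lists those names. `stateful_pod_identities` is a hypothetical helper, `cluster.local` is the default cluster domain and may differ in your cluster, and the `db` names are placeholders.

```python
def stateful_pod_identities(
    name: str,
    service: str,
    replicas: int,
    namespace: str = "default",
    cluster_domain: str = "cluster.local",
) -> list[tuple[str, str]]:
    """Return (pod_name, dns_name) pairs in the order the pods are created."""
    identities = []
    for ordinal in range(replicas):
        pod_name = f"{name}-{ordinal}"
        dns_name = f"{pod_name}.{service}.{namespace}.svc.{cluster_domain}"
        identities.append((pod_name, dns_name))
    return identities


if __name__ == "__main__":
    for pod_name, dns_name in stateful_pod_identities("db", "db-headless", replicas=3):
        print(pod_name, dns_name)
```

Because a replacement pod keeps the same ordinal, peers can keep addressing it by the same hostname after a restart.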

Helm charts are a popular way to package, deploy, and manage applications in Kubernetes. They provide a templating mechanism that allows you to define and customize your application’s configuration. Helm charts simplify the deployment process and make it easy to share and reuse application configurations.

Auto-scaling is another powerful feature of Kubernetes that allows you to automatically adjust the number of replicas based on resource utilization. Horizontal Pod Autoscaler (HPA) scales the number of pods based on CPU or memory metrics, ensuring optimal resource allocation. Cluster Autoscaler, on the other hand, scales the number of nodes in your cluster based on resource demands.
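
The core of the Horizontal Pod Autoscaler calculation is a simple ratio: desired replicas = ceil(current replicas × current metric / target metric). The sketch below applies that formula with a tolerance band and replica limits. The function name and default limits are assumptions for illustration, and the real autoscaler also accounts for details such as pods that are not yet ready, which this ignores.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Apply the basic HPA ratio, skipping changes inside the tolerance band."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: leave the replica count alone.
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Four pods averaging 90% CPU against a 60% target.
    print(desired_replicas(4, current_metric=90, target_metric=60))
```

In the example, four pods at 90% CPU against a 60% target scale out to six, while the same pods at 62% stay at four because the ratio falls inside the tolerance band.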

Monitoring and logging are crucial for gaining insights into the health and performance of your applications. Kubernetes exposes metrics and logs that ecosystem tools can collect. Prometheus and Grafana are commonly used for metrics and dashboards, while tools like Elasticsearch and Fluentd collect and aggregate logs from your applications.

The Kubernetes Community and Ecosystem

The Kubernetes community is vibrant and active, with a wealth of resources available for learning and troubleshooting. The official Kubernetes documentation is an excellent starting point, providing comprehensive guides and tutorials. Online forums and communities, such as Stack Overflow and Reddit, are also great places to seek help and connect with fellow Kubernetes enthusiasts.

The Cloud Native Computing Foundation (CNCF) plays a significant role in the development and governance of Kubernetes. It hosts various projects that complement Kubernetes, such as Prometheus for monitoring and Fluentd for logging. The CNCF ecosystem provides a rich set of tools and technologies that enhance the Kubernetes experience.

Contributing to the Kubernetes community is highly encouraged, whether it’s through code contributions, documentation, or sharing your experiences. The Kubernetes project welcomes contributions from individuals and organizations alike. By getting involved, you can help shape the future of Kubernetes and contribute to the success of the cloud-native ecosystem.

Kubernetes’ Future and Trends

As Kubernetes continues to evolve, new features and innovations are constantly being introduced. Some of the upcoming trends include improved support for hybrid and multi-cloud environments, enhanced security features, and better integration with other cloud-native technologies.

Kubernetes is playing a crucial role in the adoption of cloud-native technologies, enabling organizations to build scalable and resilient applications. It provides a solid foundation for deploying and managing containerized workloads, making it easier to embrace microservices architectures and DevOps practices.

The future of deployment and orchestration lies in Kubernetes and its ecosystem. As more organizations adopt cloud-native technologies, Kubernetes will become even more prevalent. Its flexibility, scalability, and community support make it the go-to choice for modern application deployment.

Best Free Sources To Learn Kubernetes

| Category | Title & Link | Description |
|---|---|---|
| YouTube Video | Kubernetes Tutorial for Beginners [FULL COURSE in 4 Hours] | A comprehensive tutorial for beginners covering a wide range of Kubernetes topics. |
| YouTube Video | Learn Docker and Kubernetes – Free Hands-On Course | 6-hour course on freeCodeCamp.org providing hands-on experience from basics to advanced topics. |
| Web Page | Free OpenShift tutorials and labs from Red Hat | Hands-on OpenShift missions and playground for developers to learn and experiment with cloud-native technologies. |
| Web Page | School of Kubernetes – 100% Free Kubernetes Courses | Free courses covering Kubernetes basics to advanced topics, including writing Kubernetes operators from scratch. |

Kubernetes is a game-changer in the world of software development. Its ability to automate the deployment and management of containerized applications has revolutionized the industry. By understanding its core concepts, setting up your first cluster, and exploring its advanced features, you can unlock the full potential of Kubernetes and take your applications to the next level.

Starting your Kubernetes journey may seem daunting at first, but with the right resources and a willingness to learn, you’ll soon become proficient in this powerful technology. So, dive deeper, explore the vast Kubernetes ecosystem, and join the thriving community of developers and operators who are shaping the future of deployment and orchestration.